What is Live Low Latency?

Latency in live streaming is the time delay between an event’s content being captured at one end of the media delivery chain and played out to a user at the other end. Consider a goal scored at a football game: live latency is the time between the moment the goal is captured by a camera and the moment a viewer sees it on their own device. A few different terms effectively describe the same thing: end-to-end latency, hand-waving latency, or glass-to-glass latency.

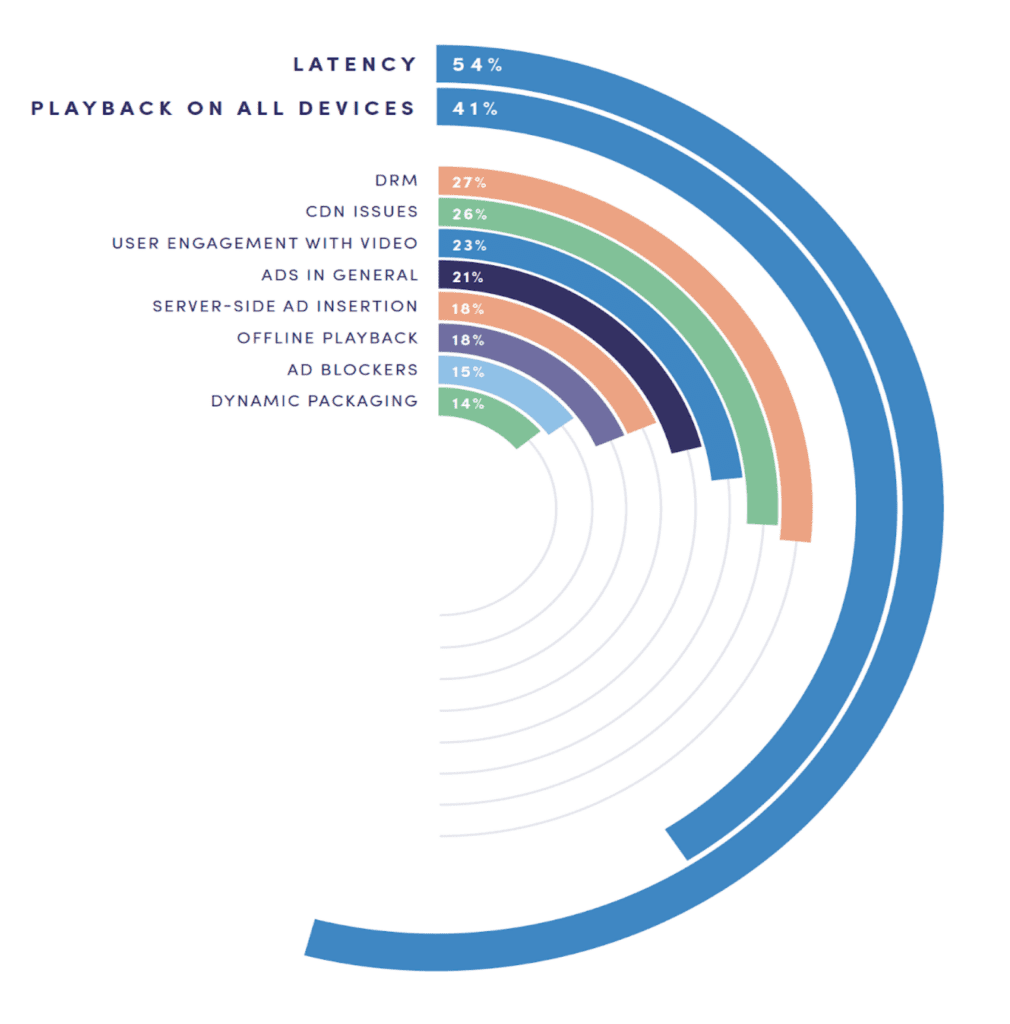

In our most recent developer report, low latency was identified as one of the biggest challenges for the media industry. This blog series takes an in-depth look at why that’s the case. Welcome to our Live Latency Deep Dive series!

Why care about Low Latency?

Most use cases where live latency is crucial can be categorized into the following:

Live content delivered across multiple distribution channels

Over-the-top (OTT) delivery methods like MPEG-DASH and Apple HLS have become the de facto standard for delivering video to audiences on devices such as smartphones, tablets, laptops, and Smart TVs. However, they come with high live latency in comparison to traditional linear broadcast delivery via satellite, terrestrial, or cable services. Live network content, like sports or news, drives the need for low live latency as these networks attempt to deliver content simultaneously over various distribution means (e.g. OTT vs. cable).

Picture a scenario where you are streaming your favorite football team playing in the global final, while your neighbor, an equally devoted fan (behind incredibly thin walls), watches on traditional linear cable. It’s the final moments of the game, but you hear the neighbor cursing loudly, even though there is still well over a minute left on your screen. The thrill is spoiled and you know your team has lost. The need for lower live latency becomes clear: the gap between broadcast and streaming is unacceptable in today’s digital world. Many factors affect how quickly content appears on a viewer’s screen, starting with infrastructure that is not optimized for low latency. The neighbor is also not the only source of spoilers: social media feeds, push notifications, and second-screen experiences run in parallel to the live event and expose the delay just as easily.

Interactive live content

Whenever audience interaction is involved, live latency should be as low as possible to ensure a good quality of experience (QoE). Such use cases include webinars, auctions, and user-generated content where the broadcaster interacts with the audience (e.g. Twitch, Periscope, Facebook Live). Latency is often described on a spectrum, where high latency is the least desirable and real-time is the most desirable. See the Latency Spectrum below (including the latency types, delay time, and streaming formats):

The latency spectrum shows that unoptimized OTT delivery accounts for around 30+ seconds of delay while cable broadcast TV clocks in at around 5 seconds – give or take. Furthermore, sub-second latencies may not be achievable with OTT methods and require other protocols like WebRTC.

Where does live latency come from?

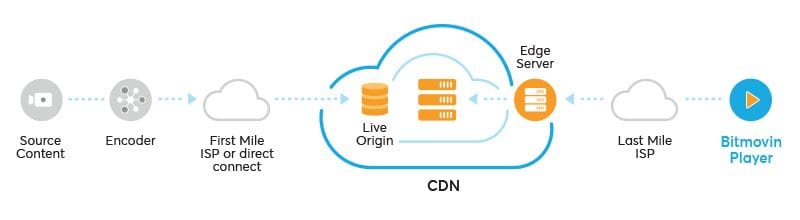

First, a slightly more technical definition of live latency: it’s the time difference between a video frame being captured and the moment it’s presented by the playback client. In other words, it’s the time that a video frame spends in the media processing and delivery chain. Every component in the chain introduces a certain amount of latency, and these contributions add up to what is considered live latency.

Let’s have a look at the main sources of live latency:

Buffering ahead for playback stability at the player-level

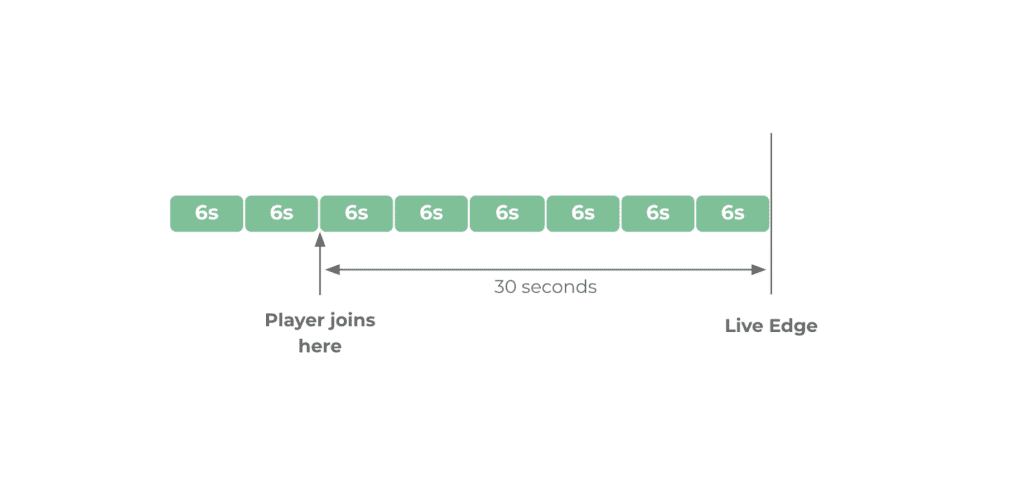

A video player will aim to maintain a pre-defined amount of buffered data ahead of its playback position. A typical value is about 30 seconds of buffer loaded ahead at all times during playback. The reason is that if network bandwidth drops during playback, there are still 30 seconds of data that can be played out without interruption. This buys the player time to react to the new bandwidth conditions and adapt appropriately. Buffer level also typically influences bitrate adaptation decisions, as a low buffer level may call for more aggressive downward adaptation.

However, when aiming for 30 seconds of buffer with a live stream, the player must keep its playback position at least 30 seconds behind the live edge (the most recent point) of the stream, which results in a live latency of at least 30 seconds. Conversely, aiming for low latency requires playing closer to the live edge and implies a small buffer. If we aim for 5 seconds of latency, the player has 5 seconds of buffer at most. Thus, a difficult trade-off between latency and playback stability must be made.
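The relationship between target latency and buffer size can be sketched in a few lines. This is a minimal illustration, not a player implementation; the function name and the `pipeline_delay_s` parameter (an allowance for encoding and delivery delay, which the paragraph above treats as zero) are assumptions made for the example.

```python
def max_buffer_seconds(target_latency_s: float, pipeline_delay_s: float = 0.0) -> float:
    """Upper bound on the forward buffer for a given target live latency.

    pipeline_delay_s is a hypothetical allowance for encoding and delivery
    delay; with the default of 0 the bound equals the target latency.
    """
    if target_latency_s < 0 or pipeline_delay_s < 0:
        raise ValueError("durations must be non-negative")
    # The player cannot buffer content that does not exist yet, so the
    # buffer can never exceed the distance to the live edge.
    return max(0.0, target_latency_s - pipeline_delay_s)


for latency in (30.0, 5.0):
    bound = max_buffer_seconds(latency)
    print(f"target latency {latency:.0f}s -> at most {bound:.0f}s of buffer")
```

Any real delay in the pipeline shrinks the bound further, which is why the trade-off gets harder as the latency target drops.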

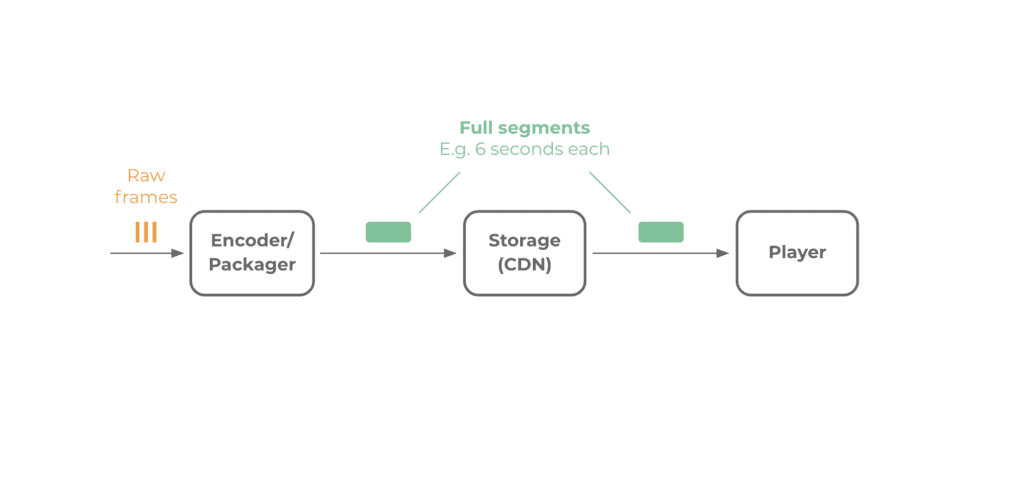

Segments are produced, transferred and consumed in their entirety

Live streams are encoded in real-time. This means that if a segment duration is 6 seconds, it will take the encoder 6 seconds to produce one full segment. Additionally, if fragmented MP4 (fMP4) is used as the container format, encoders can only write a segment to the desired storage once it’s encoded completely, i.e. 6 seconds after starting the encode of the segment. So once a segment is transferred to the storage, its oldest frame is already 6 seconds old. On the other side of the delivery chain, the player can only decode an fMP4 segment in its entirety and therefore needs to download a segment fully before it can process it. Network transfers, such as uploading the video to a CDN origin server, moving the content within the CDN, and downloading from the CDN edge server to the client, add to the overall latency as well, but to a lesser degree.

In summary, the fact that segments are only processed and transferred in their entirety results in latency being correlated directly to segment duration.
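As a rough sketch of this correlation, the age of a segment's first frame at the moment the player holds the complete segment can be modeled as the segment duration plus the transfer times. The function name and the 0.2 s upload and 0.5 s download figures below are illustrative assumptions, not measurements, and the model ignores encoder processing delay and player buffering.

```python
def oldest_frame_age_s(segment_duration_s: float,
                       upload_s: float = 0.0,
                       download_s: float = 0.0) -> float:
    """Age of a segment's first frame once the player has the whole segment.

    The encoder needs segment_duration_s of real time to finish the segment,
    then the segment is uploaded and downloaded in its entirety.
    """
    return segment_duration_s + upload_s + download_s


# Hypothetical transfer times, chosen only for illustration.
UPLOAD_S = 0.2
DOWNLOAD_S = 0.5

for duration in (6.0, 2.0, 1.0):
    age = oldest_frame_age_s(duration, UPLOAD_S, DOWNLOAD_S)
    print(f"{duration:.0f}s segments -> first frame is at least {age:.1f}s old at the player")
```

In this model the segment duration dominates the result, while the transfer times only add a comparatively small constant.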

What can we do?

Naive approach: Short segments

As latency is correlated to segment duration, a simple way to decrease latency would be to use short segments, e.g. 1-second duration. However, this comes with negative side effects such as:

- Video coding efficiency suffers: The requirement that each video segment starts with a key frame implies small groups of pictures (GOPs). This, in turn, reduces the efficiency of differential/predictive coding. With short segments, you’d have to spend more bits to reach the same perceptual quality as longer segments of the same content.

- More network requests and everything negative associated with them, e.g. time to first byte (TTFB) wasted on every request.

- Increased number of segments may decrease CDN caching efficiency.

- Buffer at the player grows in a jumpy fashion which increases the risk of playback stalls due to rebuffering.

Chunked encoding and transfer

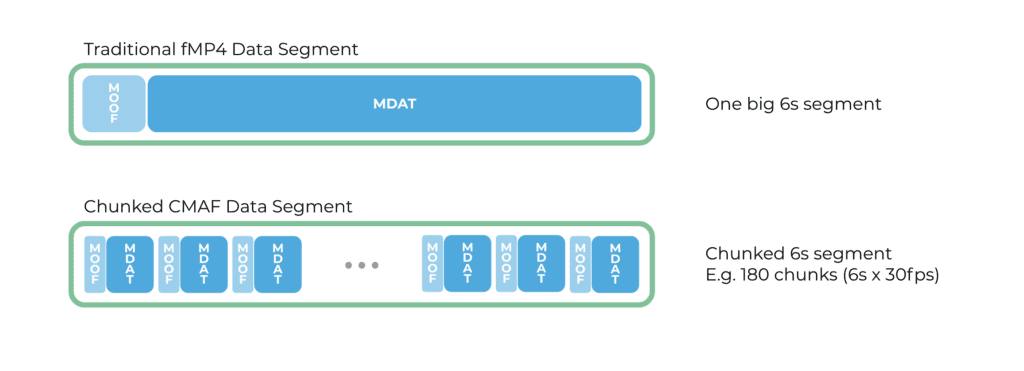

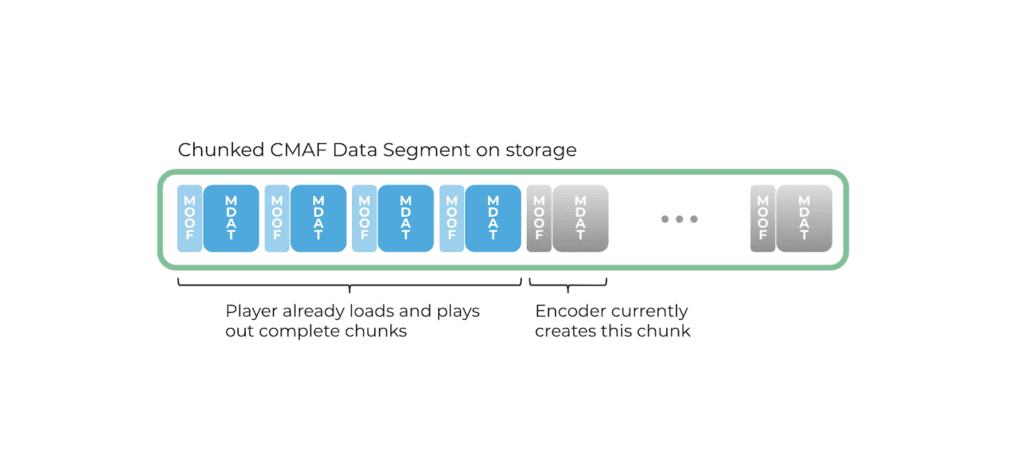

To solve the problem of segments being produced and consumed only in their entirety, we can make use of the chunked encoding scheme specified in the MPEG-CMAF (Common Media Application Format) standard. CMAF defines a container format based on the ISO Base Media File Format (ISO BMFF), similar to the MP4 container format, which is already widely supported by browsers and end devices. Within its chunked encoding feature, CMAF introduces the notion of CMAF chunks. Compared to an “ordinary” fMP4 segment that has its media payload in a single big mdat box, chunked CMAF allows segments to consist of a sequence of CMAF chunks (moof+mdat tuples). In extreme cases, every frame can be put into its own CMAF chunk. This enables the encoder to produce, and the player’s decoder to consume, segments chunk by chunk instead of only as complete segments. Admittedly, the MPEG-TS container format offers similar properties to chunked CMAF, but it’s fading as a format for OTT because it lacks the native device and platform support that fMP4 and CMAF enjoy.
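To make the moof+mdat structure concrete, here is a minimal sketch that walks the top-level ISO BMFF boxes of a segment and groups each moof with the mdat that follows it. It relies only on the standard box header layout (a 32-bit big-endian size followed by a four-character type, with size 1 signaling a 64-bit size and size 0 meaning "until the end of the data"). The function names are hypothetical, and the splitter is simplified: it ignores other boxes that may appear in real segments, such as styp.

```python
import struct
from typing import Iterator, List, Tuple


def iter_boxes(data: bytes) -> Iterator[Tuple[str, int, int]]:
    """Yield (type, offset, size) for each complete top-level box in data."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if offset + 16 > len(data):
                break
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:  # the box extends to the end of the data
            size = len(data) - offset
        if size < header or offset + size > len(data):
            break  # truncated or malformed box: stop here
        yield box_type.decode("ascii", errors="replace"), offset, size
        offset += size


def split_chunks(segment: bytes) -> List[bytes]:
    """Return the moof+mdat pairs found in a segment, in order."""
    chunks = []
    moof_start = None
    for box_type, offset, size in iter_boxes(segment):
        if box_type == "moof":
            moof_start = offset
        elif box_type == "mdat" and moof_start is not None:
            chunks.append(segment[moof_start:offset + size])
            moof_start = None
    return chunks


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a box with a 32-bit size header (helper for the demo below)."""
    return struct.pack(">I4s", len(payload) + 8, box_type) + payload


# Synthetic segment: one styp box followed by three moof+mdat pairs.
demo_segment = make_box(b"styp", b"demo") + b"".join(
    make_box(b"moof", b"meta") + make_box(b"mdat", bytes([i]) * 10)
    for i in range(3)
)
print(len(split_chunks(demo_segment)), "chunks found")
```

Because `iter_boxes` stops at the first incomplete box, the same loop could in principle be re-run on a growing byte buffer as more data arrives, which is the property chunked delivery depends on.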

Chunked encoding on its own does not decrease latency, but it is a key ingredient. To capitalize on chunked encodes, we need to combine them with HTTP/1.1 chunked transfer encoding (CTE). CTE is a feature of HTTP that allows a resource to be transferred when its size is unknown at the start of the transfer. It does so by transferring the resource chunk-wise and signaling the end of the resource with a chunk of length 0. We can utilize CTE at the encoder to write CMAF chunks to the storage as soon as they are produced, without waiting for the encode of the full segment to finish. This enables the player to request (also using CTE) the available CMAF chunks of a segment that is still being encoded and forward them as fast as possible to the decoder for playout. Playback can therefore start as soon as the first CMAF chunk is received.
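The wire format behind CTE is simple enough to sketch directly: each chunk is sent as its length in hexadecimal, a CRLF, the data, and another CRLF, and a chunk of length 0 ends the resource. The following is a simplified illustration with hypothetical function names; it drops chunk extensions and does not handle trailer fields, and in practice an HTTP library does this work for you.

```python
from typing import Iterable, Iterator, List


def encode_chunked(parts: Iterable[bytes]) -> Iterator[bytes]:
    """Frame each part as an HTTP/1.1 chunk, then emit the terminating chunk."""
    for part in parts:
        if part:  # an empty chunk would prematurely signal the end
            yield f"{len(part):X}\r\n".encode("ascii") + part + b"\r\n"
    yield b"0\r\n\r\n"  # chunk of length 0: end of the resource


def decode_chunked(body: bytes) -> List[bytes]:
    """Split a complete chunked body back into its chunks."""
    chunks = []
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        # Drop any chunk extension after ';' and parse the hex length.
        size_field = body[pos:line_end].split(b";", 1)[0]
        size = int(size_field.decode("ascii"), 16)
        if size == 0:
            return chunks
        start = line_end + 2
        chunks.append(body[start:start + size])
        pos = start + size + 2  # skip the data and its trailing CRLF


# Placeholder payloads standing in for CMAF chunks as the encoder emits them.
cmaf_chunks = [b"moof+mdat #1", b"moof+mdat #2"]
wire = b"".join(encode_chunked(cmaf_chunks))
print(wire)
print(decode_chunked(wire) == cmaf_chunks)
```

Since `encode_chunked` is a generator, each CMAF chunk can be framed and sent the moment it exists, without knowing how large the finished segment will be.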

Implications of low latency chunked delivery

… besides enabling low latency:

- Smoother and less jumpy client buffer levels thanks to the constant flow of received CMAF chunks. This lowers the risk of buffer underruns and improves playback stability.

- Faster stream startup (time to first frame) and seeking at the client, because segments can be partially decoded and played out while they are still downloading.

- Higher overhead in segment file size compared to non-chunked segments as a result of the additional metadata (moof boxes, mdat headers) introduced with chunked encodes.

- Low buffer levels at the client impact playback stability. A low live latency implies the client is playing close to the live edge and has a low buffer level. The maximum achievable buffer level is therefore bounded by the current live latency. It’s a QoE tradeoff: low latency vs. playback stability.

- Bandwidth estimation for adaptive streaming at the client is hard. When loading a segment at the bleeding live edge, the download rate will be limited by the source/encoder. As content is produced in real-time it takes, for example, 6 seconds to encode a 6-second long segment. So the download rate/time for segments is no longer limited by networks but by encoders. This causes a problem in bandwidth estimation methods that are currently commonplace in the industry and based on the download duration. The standard formula to calculate bandwidth estimation is:

estimatedBW = segmentSize / downloadDuration

E.g.: estimatedBW = 1 MB / 2 s = 8 Mbit / 2 s = 4 Mbit/s

As download duration roughly equals the segment duration when loading at the bleeding live edge using CTE, it can no longer be used to estimate client bandwidth. Bandwidth estimation is a crucial part of any adaptive streaming player and the lack of estimated bandwidth must be addressed. Research for better ways to estimate bandwidth in chunked low-latency delivery scenarios is ongoing in academia and throughout the streaming industry, e.g. ACTE.
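The effect can be sketched with the formula above. The first function implements the estimate as written (taking 1 MB as 1,000,000 bytes); the second is a deliberately simplified model, assumed for illustration, in which a live-edge download over CTE cannot finish before the encoder has produced the whole segment. The function names, the 3 Mbit/s encoding bitrate, and the 50 Mbit/s link capacity are all hypothetical.

```python
def estimate_bandwidth_bps(segment_size_bytes: float, download_duration_s: float) -> float:
    """estimatedBW = segmentSize / downloadDuration, expressed in bit/s."""
    if download_duration_s <= 0:
        raise ValueError("download duration must be positive")
    return segment_size_bytes * 8 / download_duration_s


def live_edge_download_duration_s(segment_size_bytes: float,
                                  segment_duration_s: float,
                                  link_bps: float) -> float:
    """Simplified model: the download takes at least as long as the encode."""
    network_time_s = segment_size_bytes * 8 / link_bps
    return max(network_time_s, segment_duration_s)


# Example from the text: 1 MB downloaded in 2 s.
print(estimate_bandwidth_bps(1_000_000, 2.0))  # 4000000.0 bit/s = 4 Mbit/s

# Hypothetical live-edge case: a 6 s segment encoded at 3 Mbit/s,
# fetched over a link that could carry 50 Mbit/s.
SEGMENT_DURATION_S = 6.0
ENCODED_BPS = 3_000_000
LINK_BPS = 50_000_000
size_bytes = ENCODED_BPS * SEGMENT_DURATION_S / 8

duration_s = live_edge_download_duration_s(size_bytes, SEGMENT_DURATION_S, LINK_BPS)
estimate = estimate_bandwidth_bps(size_bytes, duration_s)
print(f"link: {LINK_BPS / 1e6:.0f} Mbit/s, estimate: {estimate / 1e6:.0f} Mbit/s")
```

Under this model the estimate collapses to the encoding bitrate of the segment being downloaded, no matter how fast the link is, so the player has no evidence that it could safely switch to a higher rendition.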

Did you enjoy this post? Want to learn more? Check out Part two of the Low Latency series: Video Tech Deep-Dive: Live Low Latency Streaming Part 2

…or if you want to jump ahead, take a look at Part three: Video Tech Deep-Dive: Live Low Latency Streaming Part 3 – Low-Latency HLS